Twitter & Cryptocurrency: New scam strategy

December 5, 2022

26 minutes read

Salvador Mendoza from Metabase Q’s R&D Team

// Introduction

Social engineering attacks are among the most difficult to combat since their modus operandi is not based on an algorithm that can be blocked by a software update. Rather, they take advantage of users’ lack of knowledge and their trust (mainly that of new users) to obtain passwords or valuable information about their accounts. In this blog entry, we will focus on attacks against virtual wallets, the services responsible for managing cryptocurrencies.

With Bitcoin being one of their greatest accelerants, cryptocurrencies have become increasingly popular over time, to the point where some countries have begun to accept them as a valid form of payment in local businesses. This growing popularity has continued to attract more and more users to this market, both by investing large and small amounts and by mining these coins. Moreover, the growing boom has drawn the attention of cyber fraudsters who, knowing the large amounts of money that these systems handle, have been motivated to look for an easy and fast way to access the accounts of desperate users looking for technical support.

In this age of social networks, Twitter is a medium through which users express ideas, opinions, and complaints in a summarized manner. Among these complaints are comments regarding virtual wallets, which are of particular interest to scammers. Malicious users can monitor the flow of tweets that contain specific keywords through the development tools provided by Twitter, look for tweets from people who need help with their cryptocurrencies, and respond immediately, trying to deceive them through different social engineering techniques to extract their account information.

// How the scam works

The way it works is very simple. The attacker conducts quick research on the keywords that a novice and desperate user would tweet when they need technical support. These words and phrases could be “I need help with”, “I need support with”, “I have lost my cryptos”, “they hacked my”, or “they stole my”, plus the name of the respective cryptocurrency or virtual wallet service. Once these words have been identified, a bot can monitor the tweets that contain those phrases or words and respond as soon as possible with a pre-established message.

The malicious messages may vary but, in essence, they always seek to gain users’ trust and urge them to provide sensitive information to “solve” their problem, which then allows cyber criminals to access their accounts and empty their virtual wallets in a matter of minutes.

These messages can be classified into the following three types:

1. Impersonation as technical support

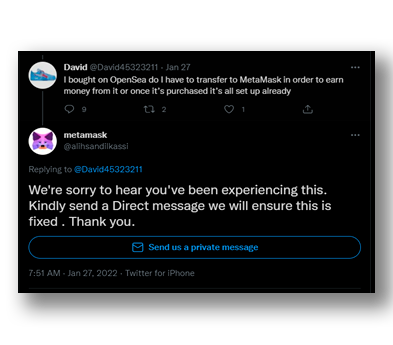

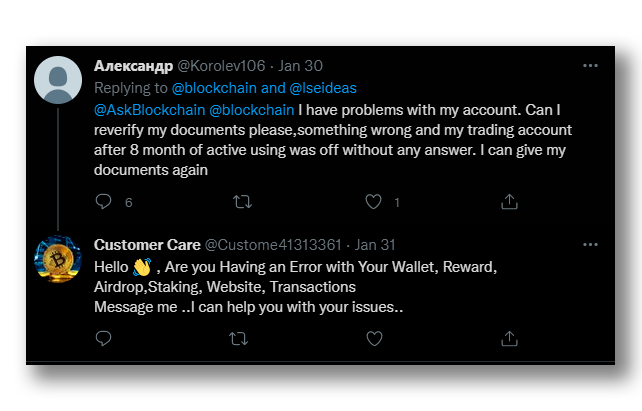

In this case, the attacker creates an account with a name, profile picture, and banner identical to those of the official tech support account, making it more difficult to identify whether it is a trustworthy account or not. The automatic messages usually start with an apology for the inconvenience and then say that, to fix the problem, the user should contact them directly so that they can give support. If this initial stage succeeds and the user decides to contact the so-called tech support directly, the attacker will try to persuade the victim to disclose their account information on the grounds that it’s necessary to “confirm their identity” to regain access. Once the wallet user reveals their password or access passphrase, the wallet is completely unprotected, and the attacker can empty the account quickly and easily.

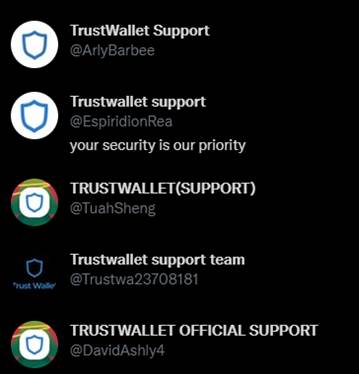

Identifying a malicious account is relatively simple. Twitter provides two principal defense mechanisms to avoid confusion. The first mechanism is that every user must have a unique username, so, when consulting support accounts, the individual should always check that the username matches the official support service needed. As we can observe in Figure 1, it is easy to identify that all those accounts are potentially malicious just by looking at the username carefully.

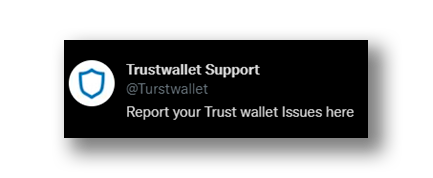

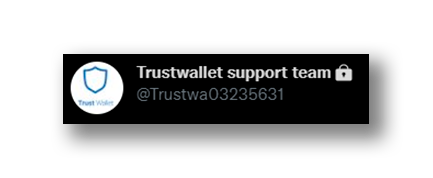

However, there are accounts that try to hide this by using similar characters or changing the position of a letter so that an unsuspecting user can be deceived more easily, as we can see in Figure 2 and Figure 3.

For this reason, Twitter has a verification symbol system (Figure 4) through which it ensures that the user who has this distinctive seal is an official member of the institution to which they claim to belong. Some malicious accounts are aware of this and try to simulate this verification seal with a similar character (Figure 5).

There are hundreds of accounts that use this scam method to deceive unsuspecting users, so it is important that, if you are a user of any of these services, you can correctly identify their official accounts on social networks to avoid these attacks.
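The username check described above can be partly automated. The following is a minimal sketch, not a method used in this research: it collapses a few look-alike characters and then measures an edit distance that counts a swapped pair of letters as a single change. The `LOOKALIKES` map, the function names, and the distance threshold are all illustrative assumptions.

```python
# Hypothetical look-alike map; extend it with the substitutions you observe.
LOOKALIKES = str.maketrans({"0": "o", "1": "l", "i": "l", "5": "s", "_": ""})


def skeleton(handle: str) -> str:
    """Lowercase the handle and collapse visually similar characters."""
    return handle.lower().translate(LOOKALIKES)


def osa_distance(a: str, b: str) -> int:
    """Edit distance where swapping two adjacent characters costs 1."""
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i
    for j in range(cols):
        d[0][j] = j
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1, d[i][j - 1] + 1, d[i - 1][j - 1] + cost)
            swapped = a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]
            if i > 1 and j > 1 and swapped:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)
    return d[-1][-1]


def looks_like(candidate: str, official: str, max_distance: int = 1) -> bool:
    """True when the candidate imitates the official handle without being it."""
    if candidate.lower() == official.lower():
        return False  # Twitter handles are case-insensitive: same account
    return osa_distance(skeleton(candidate), skeleton(official)) <= max_distance
```

For example, `looks_like("MetaMsak", "MetaMask")` flags the swapped letters, while an unrelated handle such as `"Coinbase"` is not flagged against `"Binance"`.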

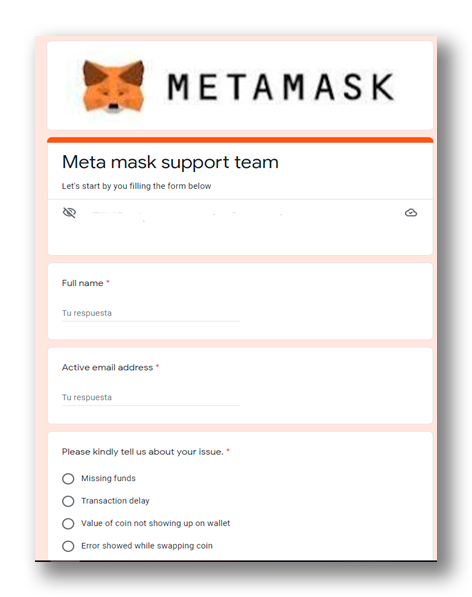

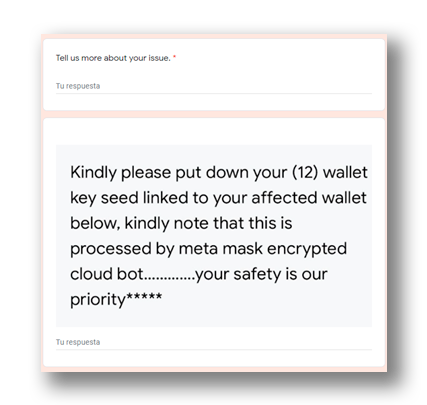

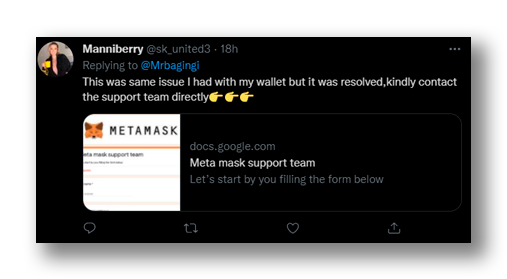

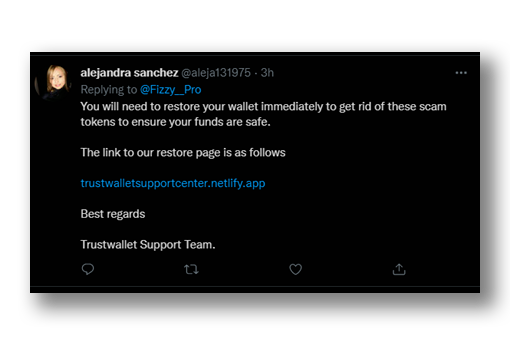

2. False Report form

In this case, the attackers pretend to be a legitimate user of the same crypto wallet who had the same problem, claiming that a report must be filled out for the crypto wallet support team to review and then solve the problem (Figure 8). These tweets usually contain a link to a Google Docs form (Figure 6) that asks for data such as the user’s email and recovery key, assuring that the data will be protected and that only the technical team will be able to access it (Figure 7).

The official wallet accounts constantly remind their users that their technical assistance services do not request passwords or security keys on web pages or applications other than the official ones.

3. Malicious Advice

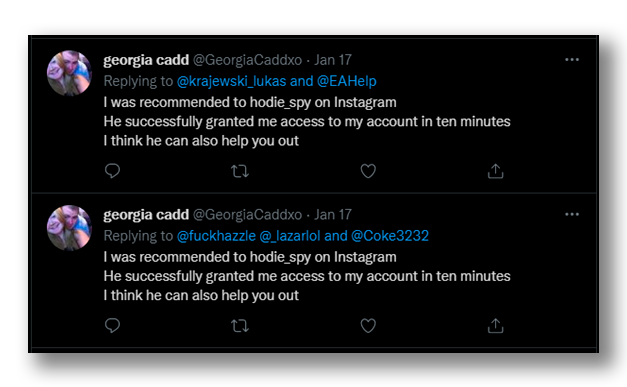

This third variant uses apparently normal accounts. They leave an automatic comment under the target users’ tweets, explaining that they went through the same trouble to gain empathy and trust. Then, they suggest contacting other Twitter or Instagram accounts that claim they can recover the hacked account and solve the issue (Figure 9).

When contacted, these “hackers” will ask for the minimum information needed to gain access to the accounts and, if they succeed, the user’s account will be completely exposed and vulnerable. In essence, this attack is very similar to the first, where the attacker pretends to be an expert willing to help, extracting all possible information and then using it in their favor.

This type of attack is very simple and easy to identify (it is essential not to share personal passwords or recovery keys over unsecure channels), yet requesting that information is one of the methods most used by cyber scammers.

// Security measures against these attacks

One of the best tools users have against social engineering attacks is strengthening their cybersecurity common sense. All automatic message attacks tend to use generic language, with phrases like “dear user,” or “sorry, mate,” so it’s likely that most users, both new and experienced ones, will immediately realize that something is not right with the supposed advice they are receiving. Nevertheless, when it comes to money and the possibility of losing it, many users tend to get nervous and make mistakes that can lead to serious consequences, so there is a need for tools that mitigate the incidence of these attacks.

It should be noted that scam account behavior is strictly prohibited by Twitter’s rules of use. Therefore, if the Twitter algorithm detects potentially dangerous comments, it hides them, and it is the user’s decision whether they want to see those comments or not (Figure 10).

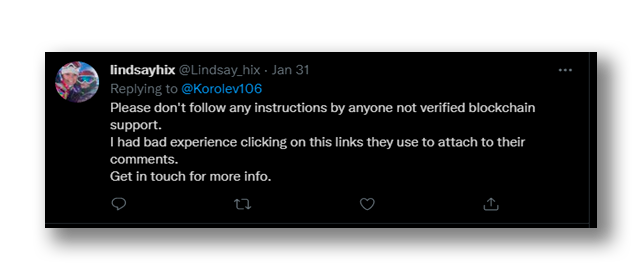

There are more experienced users who are aware of these threats and create tweets full of keywords that trigger these bots to comment (Figure 11), so that they can detect scam accounts and report them for suspension. Despite the reports and the constantly suspended accounts, this type of attack is still being perpetrated. Aside from these measures, there are not many countermeasures for this kind of attack.

// Twitter API

The applications behind autoresponders, also known as bots, are built with the Twitter Application Programming Interface (API), which gives developers tools to perform searches or post preconfigured responses in a matter of seconds.

The Twitter API supports various programming languages and is one of the most permissive in terms of the actions it allows developers to perform. In this case, for research purposes, we focused on the Python programming language, specifically on Tweepy, a library that considerably facilitates the use of the Twitter API. Both tools are free and easy to get.

Twitter is aware that an application created with this API can be problematic, so to get access, it is necessary to create a “developer account”, and request access by filling out a form. In this process, the users are asked which functions of Twitter they will use in their application and what is the main intention of their application. This request is manually reviewed by the Twitter team and, depending on their criteria, the permission is either granted or not. The most basic developer account is limited to having one application at a time, and limited access to the amount of information (500k tweets per month in the most basic case).

At first, it seems to be an effective system to prevent applications from being excessively generated by any user. However, nothing guarantees that the applicant is telling the truth.

Once the users have access to this API, they are allowed to create applications, which provide secret keys that give the application access to Twitter tools. When the connection is established, the functions of Tweepy make it possible to obtain the public information of one or several users, their timelines, their followers, their favorite tweets, etc. They can also track certain keywords in a stream and get statuses in real time. The status has several attributes from which relevant information can be obtained:

- Created_at: provide a string value with UTC time when this Tweet was created

- Id: representation of the unique identifier for this Tweet

- Text: the actual UTF-8 text of the status update

- Source: utility used to post the Tweet, as an HTML-formatted string

- In_reply_to_status_id: if the represented Tweet is a reply, this field will contain the integer representation of the original Tweet’s ID

- In_reply_to_user_id: if the represented Tweet is a reply, this field will contain the integer representation of the original Tweet’s author ID: This will not necessarily always be the user directly mentioned in the Tweet

- Coordinates: represents the geographic location of this Tweet as reported by the user or client application. The inner coordinates array is formatted as geoJSON (longitude first, then latitude)

- Place: when present, indicates that the tweet is associated with (but not necessarily originating from) a place

- Is_quote_status: indicates whether this is a Quoted Tweet

- Quoted_status_id: this field only surfaces when the Tweet is a quoted Tweet. This field contains the integer value Tweet ID of the quoted Tweet

- Quoted_status: this field is only present when the Tweet is a quoted Tweet. This attribute contains the Tweet object of the original Tweet that was quoted

- Retweeted_status: Users can amplify the broadcast of Tweets authored by other users by retweeting. Retweets can be distinguished from typical Tweets by the existence of a retweeted_status attribute. This attribute contains a representation of the original Tweet that was retweeted.

- Retweet_count: Number of times this Tweet has been retweeted

- Favorite_count: Indicates approximately how many times this Tweet has been liked by Twitter users

- Possibly_sensitive: This field only surfaces when a Tweet contains a link. The meaning of the field doesn’t pertain to the Tweet content itself, but instead it is an indicator that the URL contained in the Tweet may contain content or media identified as sensitive content

- Filter_level: Indicates the maximum value of the filter_level parameter which may be used and still stream this Tweet. So, a value of medium will be streamed on none, low, and medium streams

- Lang: When present, indicates a BCP 47 language identifier corresponding to the machine-detected language of the Tweet text, or und if no language could be detected

- User: The user who posted this Tweet

Some of these attributes may not be enabled in the Twitter account and may not provide the requested information. Some others are reserved solely for the use of an enterprise application. The user object also has its own attributes from which even more information can be obtained, such as:

- Id: The integer representation of the unique identifier for this user. This number is greater than 53 bits and some programming languages may have difficulty/silent defects in interpreting it

- Name: The name of the user, as they’ve defined it. Not necessarily a person’s name. Typically capped at 50 characters, but subject to change

- Screen_name: The screen name, handle, or alias that this user identifies themselves with. Screen_names are unique but subject to change. They typically contain a maximum of 15 characters, but some historical accounts may exist with longer names

- Location: The user-defined location for this account’s profile. Not necessarily a location, nor machine-parseable. This field will occasionally be fuzzily interpreted by the Search service

- Url: A URL provided by the user in association to the profile

- Description: The user-defined UTF-8 string describing their account

- Protected: When this attribute is true, it indicates that this user has chosen to protect their tweets

- Verified: When this attribute is true, it indicates that the user has a verified account

- Followers_count: The number of followers this account currently has. Under certain conditions of duress, this field will temporarily indicate “0”

- Friends_count: The number of users this account is following (AKA their “followings”). Under certain circumstances of duress, this field will temporarily indicate “0”

- Favourites_count: The number of Tweets this user has liked in the account’s lifetime. British spelling is used in the field name for historical reasons

- Statuses_count: The number of tweets (including retweets) issued by the user

- Created_at: The UTC datetime that the user account was created in Twitter

- Withheld_in_countries: When present, it indicates a list of uppercase two-letter country codes this content is withheld from

A single Tweet gives the API access to plenty of information. Once the common structure of the Tweets that the user wants to follow in the stream is identified, it is easy to give an automatic response with the tools that Tweepy offers.
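To make the attribute lists above more concrete, here is a small sketch that pulls a few of those fields out of a status represented as a plain dictionary, as it would appear in the JSON returned by API v1. The function name `summarize_status` and the sample values are invented for illustration, and real payloads contain many more fields.

```python
from typing import Any, Dict


def summarize_status(status: Dict[str, Any]) -> Dict[str, Any]:
    """Keep only the status and user fields relevant to spotting scam replies."""
    user = status.get("user") or {}
    return {
        "tweet_id": status.get("id"),
        "text": status.get("text", ""),
        "created_at": status.get("created_at"),
        # in_reply_to_status_id is null (None) when the Tweet is not a reply
        "is_reply": status.get("in_reply_to_status_id") is not None,
        "screen_name": user.get("screen_name"),
        "verified": bool(user.get("verified", False)),
        "followers_count": user.get("followers_count", 0),
    }


# Invented sample, shaped like a v1 status object
sample = {
    "id": 1001,
    "text": "Sorry for the inconvenience, please send us a DM",
    "created_at": "Mon Feb 28 10:00:00 +0000 2022",
    "in_reply_to_status_id": 1000,
    "user": {"screen_name": "example_support", "verified": False, "followers_count": 3},
}
summary = summarize_status(sample)
```

An unverified account with very few followers that replies with a support-style message is the kind of combination these fields make visible.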

// Modus Operandi

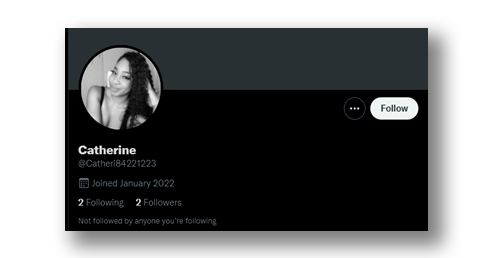

Starting with one of the most basic strategies, the attacker creates a new account with a fake profile name and photo. As part of their strategy to reduce suspicion, they even tweet and add photos to the timeline. They may also start following people, giving the organic feeling of an authentic profile (Figure 12). Once they’ve created their façade, the attackers request access to the API by lying in the Twitter forms. This permission can take a couple of days depending on the reason presented. In the meantime, the simulated profile can continue to be built. However, many others simply leave their profile empty.

With the API access obtained, the malicious user runs the automatic response application, and it automatically finds and responds to comments asking for help. It is at this point that the strategy diversifies the most. Some variants are to use links to false Google Docs forms, to suggest sending an email to false addresses, to recommend an external account of an “expert and ethical” hacker who will recover the account or fix the problem, to ask for a direct message, etc. Identifying this type of message as a threat is not complicated, since the tweets are usually very suspicious, and it is enough to visit the Twitter profile to immediately identify that it is false.

However, there are variations that can increase the chances of a successful attack. For example, at first, one might think that all malicious accounts are newly created due to their volatile nature, but, upon further investigation, we found malicious accounts that were more than ten years old (Figure 13).

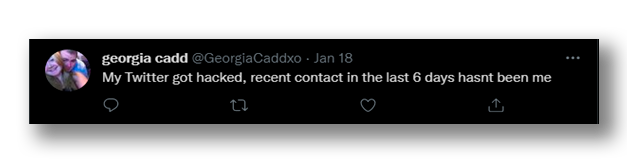

One possibility is that the attacker had an old account and simply refurbished it to function as a bot. On the other hand, some users have reported that their accounts have been taken over (Figure 14), being used to perpetrate the scam from different angles without the need to create accounts or create a convincing façade (Figure 15).

These types of accounts are dangerous because they are difficult to identify at first sight since they look like a common profile. If the message is convincing enough, it can bypass Twitter filters and avoid raising any kind of suspicion, so the profile should be carefully reviewed to determine if it is dangerous.

Accounts posing as support systems are also common in this type of scam, stealing the identity of official accounts with a false name, profile picture, and header photo. The way in which they operate is very simple.

They select a cryptocurrency wallet service, go to its official social network accounts, and copy every possible detail into their fake profile. Once this step is completed, the same procedure seen above is followed, where automatic replies are posted to tweets from users asking for help, but focusing only on the users of the selected service. The automated message usually poses as a member of the support team and asks the user to send a direct message to the account to receive instructions on how to fix the problem (Figure 16).

If the user is deceived, the attacker will use social engineering techniques to obtain the information necessary to gain access to the crypto wallet account and transfer all the money to their own accounts. The level of difficulty in identifying these accounts can vary greatly depending on the amount of effort that has been put into duplicating the account.

Another strategy uses links to external pages (Figure 17) that show a name similar to that of the requested service. However, if the user reads it carefully, the real domain of the page is not trustworthy. Clicking on an untrusted link is dangerous, as not only is the user’s information compromised, but the entire computer can also be compromised through the installation of malware.

Other attackers know that users are aware of scam bots and design their responses around that knowledge (Figure 18), so that the message is more trustworthy and more likely to work. If the user is not careful, these types of messages can be very difficult to detect.

Other attackers decide to directly monitor responses posted under official accounts (Figure 19), searching for tweets requesting help. Once the tweets have been identified, they reply to the victims’ messages, giving the feeling of being more trustworthy.

These last three strategies represent the most dangerous threats of all the variants that exist, since they are the ones that require the most effort on the part of the attackers. In addition, they are more difficult to detect since their behaviors can vary and bypass the Twitter filters. Thus, our defense strategy will focus on these.

It should be noted that Twitter actively blocks dozens of bot accounts daily, but to be able to do that, users must manually report the accounts.

// Searching for malicious users

The Twitter API can be used in different ways. There are currently two versions, and the amount or type of information that can be accessed depends on the type of account available and the authentication method used (which determines the API version). In this case, the Metabase Q research and development (R&D) team got access to an elevated developer account. With this account, we could make up to 300 requests every fifteen minutes. Each request could return a maximum of 100 Tweets, for up to 30,000 Tweets per fifteen-minute window, with a total limit of two million Tweets per month.
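The limits quoted above translate into a simple budget. The short calculation below only uses the numbers from this section. The constant and function names are ours, and the conclusion assumes every request returns the full 100 Tweets, which is a worst case rather than typical behavior.

```python
REQUESTS_PER_WINDOW = 300      # requests allowed per window
TWEETS_PER_REQUEST = 100       # maximum Tweets returned by one request
WINDOW_MINUTES = 15            # length of one rate-limit window
MONTHLY_TWEET_CAP = 2_000_000  # total Tweets allowed per month


def tweets_per_window() -> int:
    """Upper bound of Tweets that can be collected in one window."""
    return REQUESTS_PER_WINDOW * TWEETS_PER_REQUEST


def hours_to_exhaust_cap() -> float:
    """Hours of full-speed collection before the monthly cap is reached."""
    windows = MONTHLY_TWEET_CAP / tweets_per_window()
    return windows * WINDOW_MINUTES / 60


print(tweets_per_window())               # 30000
print(round(hours_to_exhaust_cap(), 2))  # 16.67
```

In other words, collecting at the maximum rate would consume the monthly quota in well under a day, which is one reason to pace the requests.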

Analyzing the modus operandi of Twitter scammers, we know that the account creation date is not a reliable parameter to determine if an account is real or not, nor can we trust the number of Tweets, response time, followers, friends, profile picture, or even the name, as all these factors are variable. For this reason, creating an application that identifies all the malicious accounts within Twitter is difficult. In this case, we decided to focus on tweets that employ more aggressive strategies. This increases the probability that the detected tweets and accounts are indeed malicious.

In the previous sections, we mentioned the properties of a tweet and a user that are accessible through Twitter’s API v1. If we observe carefully, there is no parameter that allows us to obtain the replies to a tweet; the API can only show us whether the found tweet is itself a reply. However, just over two years ago, Twitter released a second version of the API that contains more properties and allows us to access more information. With API v2, there is a property called “fields,” which contains all the information provided by API v1 plus new properties, such as the “conversation ID.”

With the conversation ID property, it is possible to get all the tweets that have been published as replies to the main tweet, and even the replies to the replies. Once all the tweets have been obtained, they can be filtered with search parameters such as keywords or links, if applicable. Through the filtered tweets, the API provides access to all the public information of the account behind each tweet, such as its screen name, number of followers, timeline, etc. Then, all this information can be stored.

The Tweepy library has been updating its functions to cover the functionalities offered by the Twitter API v2. At the time of writing, the functions related to streaming tweets are missing, so any algorithm built with this library, using API v2, can only access the history of Tweets.
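As a rough illustration of the conversation ID search, the sketch below builds the query and asks for the reply tweets together with their authors. It assumes `client` is an authenticated `tweepy.Client`, whose `search_recent_tweets` method covers roughly the last seven days. The helper names `build_reply_query` and `fetch_replies` are ours and are not necessarily how Spotter is written.

```python
from typing import Any, List, Tuple


def build_reply_query(conversation_id: int) -> str:
    """Search query matching every tweet that belongs to one conversation."""
    return f"conversation_id:{conversation_id}"


def fetch_replies(client: Any, conversation_id: int,
                  max_results: int = 100) -> List[Tuple[Any, Any]]:
    """Return (tweet, author) pairs for the replies in a conversation.

    `client` is assumed to behave like tweepy.Client.
    """
    response = client.search_recent_tweets(
        query=build_reply_query(conversation_id),
        tweet_fields=["author_id", "conversation_id", "created_at",
                      "in_reply_to_user_id"],
        expansions=["author_id"],
        user_fields=["username", "public_metrics"],
        max_results=max_results,  # 100 is the per-request maximum cited above
    )
    tweets = response.data or []
    # The author_id expansion places the author objects under includes["users"]
    users = {user.id: user for user in (response.includes or {}).get("users", [])}
    return [(tweet, users.get(tweet.author_id)) for tweet in tweets]
```

Requesting the authors through an expansion avoids one extra lookup per reply, which matters under the request limits described earlier.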

// Search Implementation

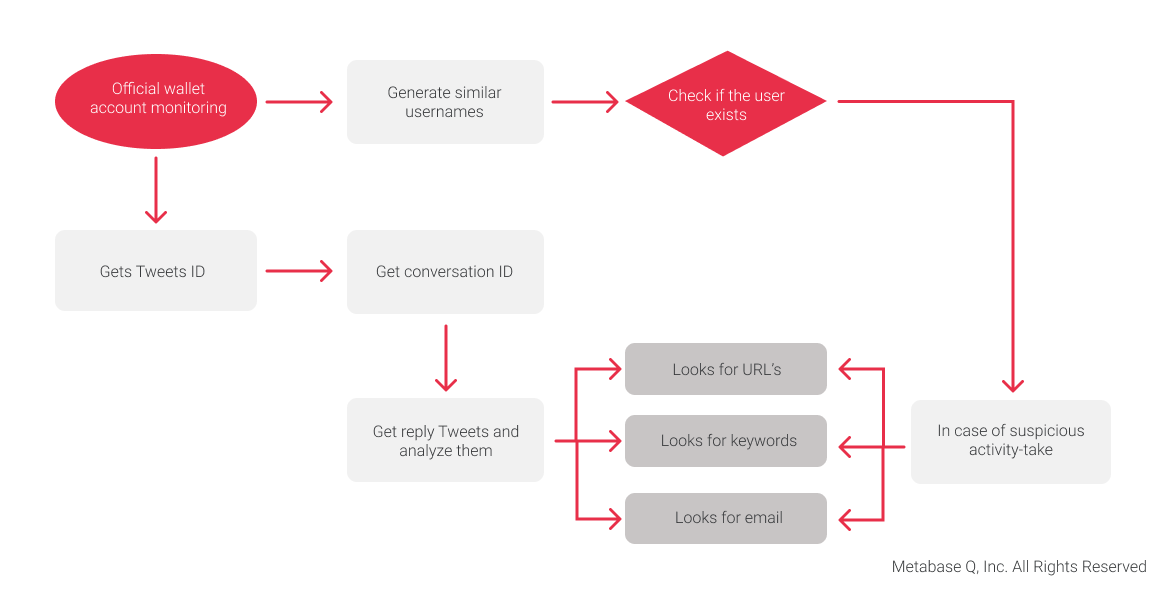

To tackle the problem, the Metabase Q team developed an algorithm (Figure 20), which we’ve named “Spotter”, capable of searching through the most recent tweets from various official virtual wallet accounts and flagging suspicious tweets that contain certain keywords. In addition, Spotter integrates Tweeptwist, a tool developed by Banbreach that generates similar Twitter usernames and checks whether they exist.

These two strategies allow us to cover a wide range of potentially dangerous accounts used by Twitter scammers. All tweets and suspicious accounts are stored in a database with all the necessary information to determine if they are malicious accounts or a “false-positive.” Tweets that do not pass the filter are also stored to verify the “false-negatives,” and, thus, improve the accuracy of the filters.

Every fifteen minutes, Spotter takes a name from the list (in order) and, using the Twitter API v2 and the Tweepy library, obtains the last ten tweets of the official account. This allows us to search for all the tweets that have responded to each thread, including the replies to the replies. In a first search, all the tweets that have this conversation ID are stored in a database table using Structured Query Language (SQL) through Python’s sqlite3 module. The saved data is:

- Conversation ID

- Username

- User ID

- Tweet date

- Tweet text

- Tweet ID

- ID of the user replied to
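A table holding the fields listed above could look like the following sketch, written with Python’s standard `sqlite3` module. The table name, column names, and sample row are our own choices for illustration, since the actual schema used by Spotter is not published here.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS replies (
    tweet_id           INTEGER PRIMARY KEY,
    conversation_id    INTEGER NOT NULL,
    username           TEXT,
    user_id            INTEGER,
    tweet_date         TEXT,
    tweet_text         TEXT,
    replied_to_user_id INTEGER
)
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def save_reply(conn: sqlite3.Connection, row: dict) -> None:
    """Insert one reply; named placeholders keep the tweet text safely escaped."""
    conn.execute(
        "INSERT INTO replies VALUES (:tweet_id, :conversation_id, :username, "
        ":user_id, :tweet_date, :tweet_text, :replied_to_user_id)",
        row,
    )
    conn.commit()


conn = open_db()
save_reply(conn, {
    "tweet_id": 2, "conversation_id": 1, "username": "someone", "user_id": 7,
    "tweet_date": "2022-02-28T10:00:00Z", "tweet_text": "DM us",
    "replied_to_user_id": 9,
})
```

Using the tweet ID as the primary key means the database itself rejects a second insert of the same tweet.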

On a second search for tweets with the same conversation ID, only tweets with keywords are stored. A problem that we encountered is that many of the words used by scammers are also used by real users asking for help, so this is a hard problem to solve with such a simple filter. As a provisional solution, we do not register the tweets that reply to the official accounts, only the replies to the users asking for help. This strategy noticeably reduces the false-positive ratio. Spotter can work for a long period, searching repeatedly for tweets from the accounts on the list, so it is possible that many tweets will be obtained more than once. Therefore, each tweet ID obtained is looked up in the database. If it is already there, the tweet is simply ignored and the search continues for new tweets, thus avoiding duplication.

The second part of Spotter uses the Tweeptwist tool to look for usernames that have tiny variations in their structure. It uses the Twitter API v1 and its latest version was published in 2018. Since then, the API has had several changes. In addition, the original code was configured to work as a command and not as a function, so the structure of Tweeptwist had to be modified to work properly, to be included in the main code, and to allow Spotter to handle the information obtained.

With this fixed and functioning, Tweeptwist takes the name of the official account from the list and, through different techniques, generates a list of possible fake names that could be used as accounts on Twitter. Then, using API v1, it checks whether each user exists, returning only existing users. Moreover, it also obtains information such as the number of friends, followers, or tweets that the account has.

From the resulting list, the users of most interest are those who are active on the social network; therefore, those who have recently published tweets are selected. Using API v2, Spotter obtains every user’s latest Tweets (within a period of seven days) and, if they contain any keyword, the user is considered active.

All accounts in the list are stored in another table of the database, regardless of whether they are active. As with the Tweets, we avoid duplicating information by checking the user ID within the database.
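The provisional filter and the duplicate check described above can be sketched as follows. This is an illustration under assumptions: the keyword tuple is a small subset, matching is done on whole lowercase words, and the table and function names are ours.

```python
import re
import sqlite3

KEYWORDS = ("support", "dm", "help", "sorry", "contact")  # illustrative subset


def contains_keyword(text: str, keywords=KEYWORDS) -> bool:
    """Whole-word, case-insensitive keyword match (our assumption)."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return any(keyword.lower() in words for keyword in keywords)


def is_suspicious(text: str, replied_to_user_id: int, official_user_ids: set) -> bool:
    """Flag keyword tweets unless they are replies to an official account."""
    if replied_to_user_id in official_user_ids:
        return False  # most likely a real user asking the official account for help
    return contains_keyword(text)


def open_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE IF NOT EXISTS suspicious "
        "(tweet_id INTEGER PRIMARY KEY, tweet_text TEXT)"
    )
    return conn


def store_if_new(conn: sqlite3.Connection, tweet_id: int, text: str) -> bool:
    """Store the tweet only if its ID is not in the database; True when stored."""
    seen = conn.execute(
        "SELECT 1 FROM suspicious WHERE tweet_id = ?", (tweet_id,)
    ).fetchone()
    if seen:
        return False
    conn.execute("INSERT INTO suspicious VALUES (?, ?)", (tweet_id, text))
    conn.commit()
    return True
```

Calling `store_if_new` twice with the same tweet ID stores it once and returns `False` the second time, which mirrors the lookup-then-ignore behavior described in the text.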
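To give an idea of what generating possible fake names can mean, here is a deliberately simple sketch. It is not Tweeptwist’s implementation: the four techniques below (omission, transposition, repetition, and look-alike substitution) and the `SUBSTITUTIONS` map are our own illustrative choices, and checking whether each candidate exists would still require an API call.

```python
import re

# Hypothetical look-alike substitutions, keyed by lowercase character
SUBSTITUTIONS = {"o": "0", "l": "1i", "i": "l1", "s": "5"}
VALID_HANDLE = re.compile(r"[a-z0-9_]{1,15}")  # handles: letters, digits, underscore


def generate_variants(handle: str) -> set:
    """Generate look-alike candidates for an official handle."""
    handle = handle.lower()  # handles are case-insensitive
    variants = set()
    for i, ch in enumerate(handle):
        variants.add(handle[:i] + handle[i + 1:])            # omission
        variants.add(handle[:i + 1] + ch + handle[i + 1:])   # repetition
        for sub in SUBSTITUTIONS.get(ch, ""):                # look-alike character
            variants.add(handle[:i] + sub + handle[i + 1:])
    for i in range(len(handle) - 1):                         # transposition
        variants.add(handle[:i] + handle[i + 1] + handle[i] + handle[i + 2:])
    variants.add(handle + "_")
    variants.add("_" + handle)
    variants.discard(handle)  # the official handle is not a fake
    return {v for v in variants if VALID_HANDLE.fullmatch(v)}
```

For `"binance"`, the result includes candidates such as `"blnance"` and `"bniance"`, which are hard to tell apart from the original at a glance.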
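The activity rule above (a keyword tweet within the last seven days) can be expressed in a few lines. In this sketch, each tweet is assumed to be a `(created_at, text)` pair with a timezone-aware datetime, and keywords are matched as whole lowercase words. Both are assumptions made for illustration.

```python
import re
from datetime import datetime, timedelta, timezone


def contains_keyword(text: str, keywords) -> bool:
    """Whole-word, case-insensitive keyword match (our assumption)."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return any(keyword.lower() in words for keyword in keywords)


def is_active(tweets, keywords, now=None, window_days: int = 7) -> bool:
    """True if any tweet inside the time window contains a keyword."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=window_days)
    return any(
        created_at >= cutoff and contains_keyword(text, keywords)
        for created_at, text in tweets
    )
```

Passing `now` explicitly makes the rule easy to test against stored tweets instead of the current clock.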

Lastly, to prevent the Twitter API cap from being exceeded, Spotter pauses for fifteen minutes and restarts its process with the next user on the list. When the last user on the list is reached and there’s still time left, the list restarts.
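The scheduling described above amounts to cycling through the list with a fixed pause until the time budget runs out. A minimal sketch follows, in which `process_account` stands for the search steps of the previous paragraphs and the function and parameter names are ours. The `sleep` and `clock` parameters exist only so the loop can be tested without waiting.

```python
import itertools
import time


def run_spotter(accounts, process_account, total_seconds,
                pause_seconds=900, sleep=time.sleep, clock=time.monotonic):
    """Process accounts in order, pausing between them, until time runs out."""
    deadline = clock() + total_seconds
    processed = []
    # itertools.cycle restarts the list after the last account
    for account in itertools.cycle(accounts):
        if clock() >= deadline:
            break
        process_account(account)
        processed.append(account)
        sleep(pause_seconds)  # stay under the API rate limit
    return processed
```

A call such as `run_spotter(["Binance", "Coinbase"], print, total_seconds=3600)` would alternate between the two accounts every fifteen minutes for one hour.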

// Experimental Test

For Spotter validation, a two-hour test with a list of eleven official cryptocurrency wallet accounts was performed:

- “Blockchain”

- “AskBlockchain”

- “Metamask”

- “MetaMaskSupport”

- “TrustWallet”

- “TrustWalletApp”

- “Binance”

- “BinanceHelpDesk”

- “BinanceX”

- “Coinbase”

- “CoinbaseSupport”

In this specific order, we search for the last ten tweets and look for the replies. As mentioned before, Spotter obtains each conversation ID and performs two searches. The first one obtains the last 100 reply tweets (because of API limits) without any filter, and then stores them in a database table. The second search obtains the last 100 reply tweets that contain the following keywords:

- Support

- Join

- Fixed

- Assist

- Reaching

- Helped

- Chat

- Sorry

- DM

- Direct

- Message

- Help

- Reach

- Write

- Contact

As a result of our investigation, we found that these words are the most commonly used by scammers in their messages. All obtained tweets are stored in another table of the same database.

Lastly, Spotter runs Tweeptwist to look for similar usernames, and then it registers every existing user (that’s not an official account) and investigates their last tweets. The methodology remains the same: if the account is active (has tweeted in the last seven days) and the tweets contain any keyword of the filter, it is tagged as “active.” Finally, all these accounts are registered in another table of the database.

At this point, Spotter takes a 900-second delay (fifteen minutes) and waits to repeat the same process with the next official account until the time is over.
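One way to express the second search is to combine the conversation ID with the keywords in a single API v2 query, where a space means AND and parentheses group the OR terms. The sketch below is an assumption about how such a query could be built, not Spotter’s actual code. It also assumes that each keyword and the conversation operator count as one condition toward the limit of 20 mentioned under Limitations.

```python
KEYWORDS = [
    "support", "join", "fixed", "assist", "reaching", "helped", "chat", "sorry",
    "DM", "direct", "message", "help", "reach", "write", "contact",
]


def build_keyword_query(conversation_id: int, keywords=KEYWORDS,
                        max_conditions: int = 20) -> str:
    """Build a search query for replies containing any of the keywords."""
    conditions = 1 + len(keywords)  # conversation_id plus one per keyword (assumed)
    if conditions > max_conditions:
        raise ValueError(f"{conditions} conditions exceed the limit of {max_conditions}")
    return f"conversation_id:{conversation_id} ({' OR '.join(keywords)})"


print(build_keyword_query(42, ["help", "DM"]))
# conversation_id:42 (help OR DM)
```

With the fifteen keywords of the list, the query stays under the assumed limit, leaving room for a few additional conditions.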

// Results

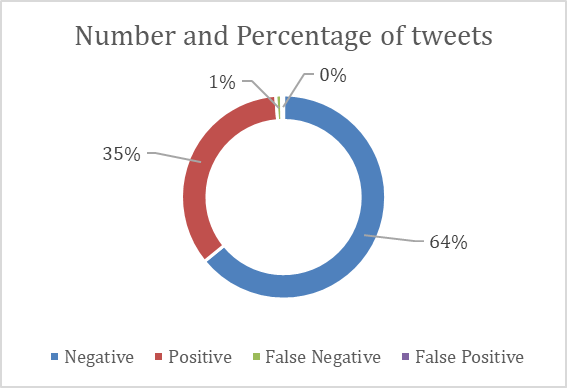

The last test was performed on February 28, and in only two hours it collected 10,246 tweets. Spotter marked 6,652 tweets as negative (meaning they didn’t contain any keyword), with a false-negative ratio of 1.44%. Furthermore, 3,594 tweets were marked as positive (meaning they contained at least one keyword), with a false-positive ratio of 0.9%, as we can see in Figure 21. These percentages do not consider spam tweets. Unfortunately, many spam tweets also have the same keywords as the scam tweets.

In Figure 22, we can observe the number of tweets per account registered in the test. With this information, we can see how frequent this kind of attack is for certain crypto wallets. We can also conclude that the incidence is higher in the main accounts than in the support accounts.

For the similar-username search with Tweeptwist, out of 125 accounts, only five were active, and one of those turned out to be a false positive.
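As a quick sanity check of these figures, the snippet below recomputes the total and estimates how many tweets the two ratios correspond to. The estimated counts are approximations derived from the rounded percentages, assuming each ratio is taken over the tweets in its own group; the exact numbers are not reported.

```python
negatives = 6_652  # tweets without any keyword
positives = 3_594  # tweets with at least one keyword

total = negatives + positives

# Approximate counts implied by the reported ratios (our estimate)
estimated_false_negatives = round(negatives * 1.44 / 100)
estimated_false_positives = round(positives * 0.9 / 100)

print(total)                      # 10246
print(estimated_false_negatives)  # 96
print(estimated_false_positives)  # 32
```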

// Discussion

Spotter reliably finds many tweets that attempt to steal cryptocurrencies, with low error rates, and it can work for long periods. This tool could be used to reduce the risk of falling for this type of scam.

// Limitations

There are many limitations in the Twitter API that make the implementation of an effective search algorithm harder. One of those limitations is the number of conditions that can be used in the filters (a maximum of 20 conditions for the elevated developer account), so it is necessary to select the keywords carefully.

Another problem we encountered is that the filter only registers replies, so it may not reach every scam tweet in the conversation, and it would be challenging to differentiate these tweets from real tweets asking for help using only the Twitter API filters.

// References

Twitter bots pose as support staff to steal your cryptocurrency, Lawrence Abrams, Dec. 7, 2021. https://www.bleepingcomputer.com/news/security/twitter-bots-pose-as-support-staff-to-steal-your-cryptocurrency/

Tweeptwist, banbreach, Apr. 28, 2018. https://github.com/banbreach/tweeptwist

Tweepy, Joshua Roesslein, 2022. https://docs.tweepy.org/en/stable/index.html

Twitter API Documentation, Twitter, 2022. https://developer.twitter.com/en/docs